Overview

Role: Programmer/Technical Artist

Tools Used: Unity3D, Autodesk Maya, Adobe Photoshop

Collaborators: Yujin Ariza, Qiqi Feng, Bryan Kim, Ridima Ramesh, Erhan Qu

Awards: Intel University Games Showcase @ GDC Finalist (2019)

Intent

The purpose of Pupil: Augmented Learning was to explore how wearable augmented reality technology can support classroom-based learning in ways that take advantage of the unique affordances of AR. We were tasked with creating 3-4 proof-of-concept prototypes that feature different forms of learner-content, learner-learner, and learner-instructor interaction. Although the context of these prototypes is educational, our deliverables focused not on the pedagogical content but on the gestural interactions we built for each prototype and the design patterns that emerged across them.

The main deliverable from this project was our design documentation, which contains detailed design and technical descriptions of our prototypes.

Process

Here, I describe my individual contributions to the Pupil project. The comprehensive documentation for this project is available on our project website.

ArUco Marker Tracking

We originally intended to use markers for our 2D Geometry demo, where students construct two-dimensional shapes using the markers as vertices, and each interior angle of the shape is displayed to teach the student about angle properties. My first approach was to use the Vuforia SDK, but I discovered that Vuforia was not compatible with the ZED Mini camera we used for our custom headset.
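The angle display described above can be sketched in a few lines. This is only an illustration in Python, not the project's Unity code: the function name `interior_angles` is hypothetical, and it assumes the tracked marker positions have already been projected onto a 2D plane and are listed in order around a simple (non-self-intersecting) shape.

```python
import math

def interior_angles(vertices):
    """Return the interior angle (in degrees) at each vertex of a simple polygon.

    `vertices` is a list of (x, y) points in order around the shape, e.g. the
    2D positions of tracked markers (an assumption made for this sketch).
    """
    n = len(vertices)
    if n < 3:
        raise ValueError("a polygon needs at least three vertices")

    # Twice the signed area: positive for counter-clockwise vertex order.
    area2 = sum(
        x1 * y2 - x2 * y1
        for (x1, y1), (x2, y2) in zip(vertices, vertices[1:] + vertices[:1])
    )
    if area2 == 0:
        raise ValueError("vertices are collinear or the polygon is degenerate")

    angles = []
    for i in range(n):
        px, py = vertices[i - 1]          # previous vertex
        cx, cy = vertices[i]              # current vertex
        nx, ny = vertices[(i + 1) % n]    # next vertex
        ax, ay = px - cx, py - cy         # edge toward the previous vertex
        bx, by = nx - cx, ny - cy         # edge toward the next vertex
        # Signed angle from the "next" edge to the "previous" edge.
        angle = math.degrees(math.atan2(bx * ay - by * ax, ax * bx + ay * by))
        if area2 < 0:
            angle = -angle                # flip sign for clockwise ordering
        angles.append(angle % 360)        # reflex corners come out above 180
    return angles
```

For a shape with n vertices the returned angles sum to (n - 2) × 180 degrees, which gives a quick sanity check on the tracked positions before anything is displayed to the student.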

I was able to achieve marker tracking with the OpenCV for Unity package instead. The ZED SDK renders the camera view by drawing it onto a RenderTexture that is displayed on a quad GameObject in front of each eye's camera, so my initial approach was to have OpenCV search this RenderTexture for the markers. However, this caused massive drops in frame rate because the RenderTexture had to be copied from the GPU to the CPU before OpenCV could process it, and the issue grew worse at larger display sizes. This approach also yielded inaccurate tracking, as seen below.

Initial test for the OpenCV + ZED marker tracking

After this test, we realized that before the ZED SDK creates the RenderTexture, it captures the camera view as a matrix of image pixel data. Feeding this matrix directly into OpenCV completely bypassed the GPU-to-CPU copy and fixed our original frame rate issue. Although in the end we didn't use this feature for the 2D Geometry demo, we did use it in our other demos for object anchoring and network syncing.

The final iteration of image tracking

Block Stacking

The early demo interaction for block stacking features two blocks and a white pedestal. The pedestal manages a stack of individual block objects. The user can build a stack by grabbing a block and dragging it over to the pedestal (or to the top of the current stack). When the block is close enough to the top of the stack, a “ghost” version of the block appears to indicate that it is ready to be placed. If the user lets go of the block at this moment, it snaps into place at the top of the stack, in the same location as the ghost block. The released block becomes the new top of the stack, and the lower blocks in the stack are locked in place.
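The snap-and-lock behavior described above can be sketched as follows. This is an illustrative Python sketch rather than the project's Unity implementation: the `Block` and `Pedestal` classes, the `SNAP_DISTANCE` threshold, and the block height are all hypothetical values chosen for the example.

```python
import math
from dataclasses import dataclass, field

SNAP_DISTANCE = 0.15  # hypothetical snap threshold, in metres

@dataclass(eq=False)  # compare blocks by identity, not by field values
class Block:
    name: str
    height: float = 0.1
    position: tuple = (0.0, 0.0, 0.0)  # centre of the block (x, y, z)
    locked: bool = False

@dataclass(eq=False)
class Pedestal:
    position: tuple                     # centre of the pedestal's top surface
    stack: list = field(default_factory=list)

    def snap_point(self, block):
        """Centre position the block would take on top of the current stack."""
        x, y, z = self.position
        top = y + sum(b.height for b in self.stack)
        return (x, top + block.height / 2, z)

    def ghost_visible(self, block):
        """True when the held block is close enough for the ghost to appear."""
        return math.dist(block.position, self.snap_point(block)) <= SNAP_DISTANCE

    def release(self, block):
        """Called when the user lets go; snaps the block if the ghost is showing."""
        if block in self.stack or not self.ghost_visible(block):
            return False
        block.position = self.snap_point(block)
        for lower in self.stack:
            lower.locked = True         # everything below the new top is fixed
        self.stack.append(block)
        return True
```

In this sketch the ghost is simply a preview drawn at `snap_point` whenever `ghost_visible` is true, so the preview and the final snapped position always agree.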

This mechanic was an experiment in presenting layered information, since blocks are not necessarily stacked in a particular order. From playtesting, we ultimately determined that it was not the best way to present primarily layered content, but we decided the mechanic was still worth using for other purposes.

The initial block stack mechanic demo test

Atom Spawning + Bonding

Our third prototype is a collaborative chemistry demo where students can construct simple molecules from atoms. This prototype remains a proof of concept even in its final iteration, as we limited the demo to hydrogen, oxygen, and carbon atoms to keep the project within scope. Initially, the atoms were pre-spawned within the workspace, but the space would quickly become disorganized, and guests struggled to identify atoms once they had been moved from their labeled spawn locations. To solve this issue, I implemented a spawn menu attached to the user's hand. This menu introduced a new gesture to our demo and allows quick reference and access to atoms when needed.

Product

Our main deliverable for this project was our design documentation, which is available on our project website. This documentation is intended to serve as a reference for other AR developers creating similar gesture-based interactive experiences. Development on the project has ended, but it is permanently installed at the Children's Museum in Pittsburgh, PA.

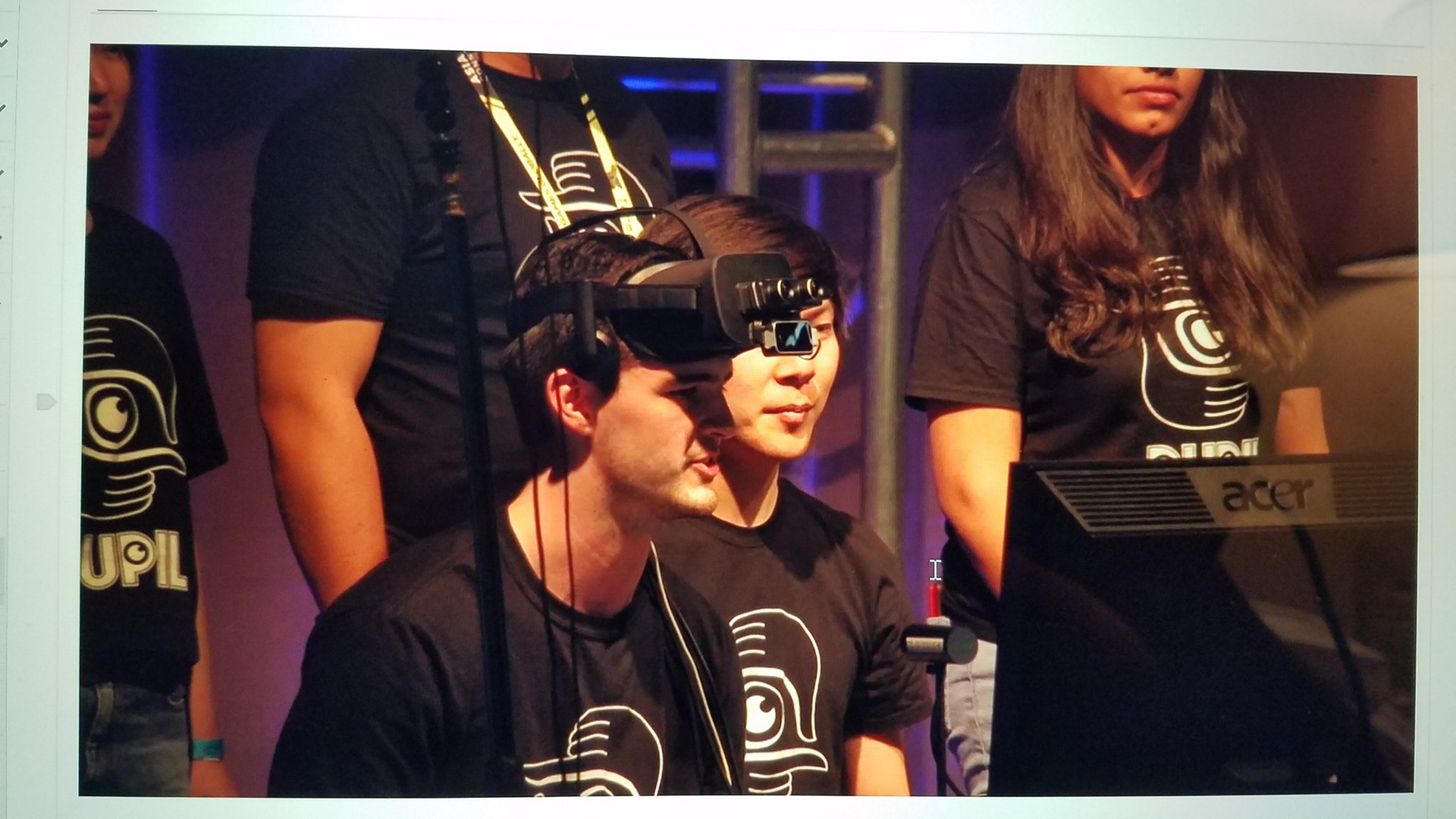

Pupil was nominated as a finalist for the 2019 Intel University Games Showcase. We demonstrated the project at GDC in San Francisco, both in person at a booth and later over a livestream at the event.

Pupil was presented at the ED Games Expo 2019 at the Kennedy Center in Washington, DC. The Expo showcases educational games and technologies and features many game, VR, and AR-related projects.

Demoing Pupil to elementary school students at the 2019 ED Games Expo